AI Act, the law governing AI: Implications and strategic directions

Reading time: 5 minutes

Artificial intelligence is being adopted at an increasing pace across the globe, transforming entire industries and disrupting the way businesses operate. In 2020, Gartner estimated that only 13% of organizations were at the high end of its AI maturity model, but this proportion continues to grow. The Boston Consulting Group (BCG) also highlights in its latest report that “most companies globally are making steady progress” in this area. As AI grows in capability and impact, the need for a regulatory framework has become paramount. It is in this context that the European Union introduced the Artificial Intelligence Regulation, or AI Act, which aims to regulate the development and use of AI while protecting the rights of European citizens.

What is the AI Act?

Why is the AI Act crucial in Europe?

Europe stands out for its approach focused on human rights and the protection of personal data. The AI Act is crucial because it extends these principles to the field of artificial intelligence, seeking to prevent potential abuses and ensure that AI technologies are used ethically and responsibly.

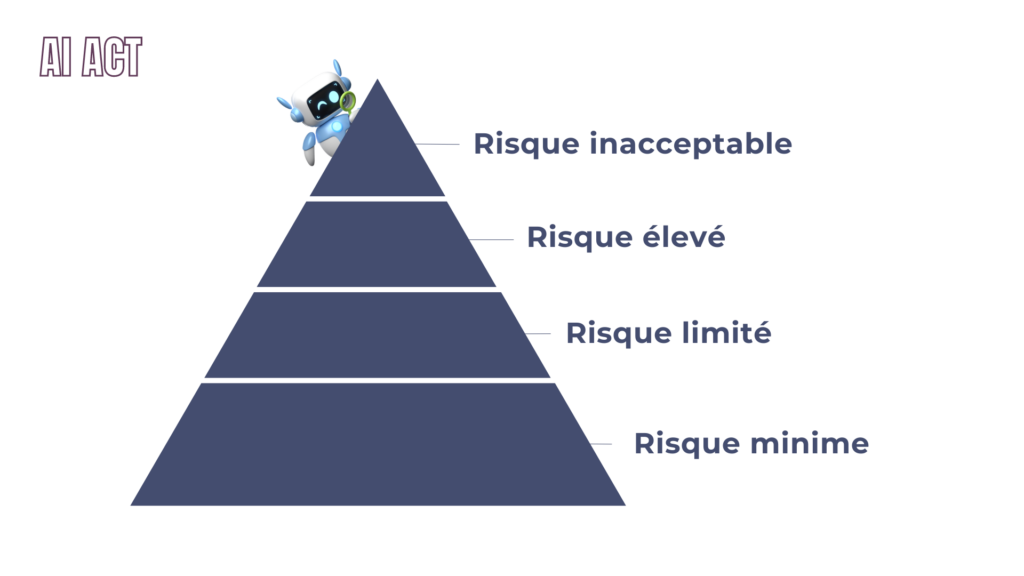

Classification of AI systems according to risk

AI systems are categorized into four risk levels: minimal, limited, high, and unacceptable. Each level requires compliance measures proportional to the potential impact on citizens and businesses.

- Minimal Risk: Minimal-risk AI systems, such as spam filters or recommendation systems, are mostly exempt from regulatory obligations. Providers may still choose to follow voluntary codes of conduct, for example by informing users about the use of AI.

- Limited Risk: Limited-risk AI systems, such as chatbots or certain speech recognition tools, must comply with additional transparency requirements. Users must be informed that they are interacting with an AI.

- High Risk: High-risk systems, such as those used in critical sectors like healthcare, infrastructure, or security, are subject to strict requirements. These systems must undergo rigorous assessment before deployment, covering risk management, compliance audits, and checks on accuracy and reliability.

- Unacceptable Risk: AI systems posing unacceptable risk, such as those used for mass surveillance or psychological manipulation, are prohibited due to their potential threat to fundamental rights and public safety.
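For teams that keep an inventory of their AI systems, the four tiers above can be sketched as a simple lookup. This is a minimal illustration, not a legal classification tool: the use-case labels, the obligation summaries, and the `triage` helper are all hypothetical names built only from the examples in the list above. A real assessment has to be made against the text of the regulation.

```python
from enum import Enum
from typing import List, Tuple


class RiskLevel(Enum):
    """The four risk tiers described in the list above."""
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Hypothetical labels taken from the examples in the article.
# They are illustrative only and are not legal categories.
EXAMPLE_USE_CASES = {
    "spam_filter": RiskLevel.MINIMAL,
    "recommendation_system": RiskLevel.MINIMAL,
    "chatbot": RiskLevel.LIMITED,
    "speech_recognition_tool": RiskLevel.LIMITED,
    "healthcare_system": RiskLevel.HIGH,
    "critical_infrastructure_system": RiskLevel.HIGH,
    "mass_surveillance": RiskLevel.UNACCEPTABLE,
    "psychological_manipulation": RiskLevel.UNACCEPTABLE,
}

# One-line summaries of the measures the article attaches to each tier.
OBLIGATIONS = {
    RiskLevel.MINIMAL: ["mostly exempt from regulatory obligations"],
    RiskLevel.LIMITED: ["inform users that they are interacting with an AI"],
    RiskLevel.HIGH: [
        "risk management",
        "compliance audits",
        "accuracy and reliability checks before deployment",
    ],
    RiskLevel.UNACCEPTABLE: ["prohibited"],
}


def triage(use_case: str) -> Tuple[RiskLevel, List[str]]:
    """Return the illustrative tier and obligations for a known example."""
    level = EXAMPLE_USE_CASES.get(use_case)
    if level is None:
        # Anything outside the article's examples needs a proper assessment.
        raise ValueError(f"No example mapping for {use_case!r}")
    return level, OBLIGATIONS[level]


if __name__ == "__main__":
    level, duties = triage("chatbot")
    print(level.value, duties)
```

Keeping the tier and its obligations in separate tables means a system can be reclassified by changing a single entry, while unknown use cases fail loudly instead of defaulting to a low tier.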

Not sure which risk level applies to you? Take the test now.

Challenges and implications for businesses

The adoption of the AI Act poses significant challenges for businesses, particularly in terms of compliance and technological adaptation.

While large companies may have the resources to adapt quickly, startups may find the new rules an obstacle to innovation and growth. However, the same rules could also give them an opportunity to differentiate themselves through ethical and transparent AI practices.

Compliance strategies for AI stakeholders

Companies will need to develop compliance strategies, possibly by setting up dedicated AI risk management and data governance teams, to meet the requirements of the AI Act.

Social and ethical impact of the AI Act

The impact of the AI Act goes far beyond its technical and commercial implications; it also addresses profound social and ethical questions about protecting individuals’ fundamental rights. Promoting the ethical use of AI is at the heart of the AI Act. This includes safeguards against discrimination, respect for privacy, and assurance that automated decisions are fair, transparent, and subject to human review when necessary. By imposing high standards for AI systems, the AI Act seeks to protect European citizens from potential abuses such as unregulated mass surveillance or algorithmic bias that could negatively affect their lives.

AI Act and technological innovation

Although the regulatory framework may initially appear restrictive, it is designed to stimulate responsible and secure innovation in the field of AI. One of the key challenges of the AI Act is to strike a balance between facilitating technological innovation and applying the necessary controls to prevent risks associated with AI.

Striking this balance is crucial to maintaining the EU’s competitiveness in the global digital economy while ensuring that innovation respects European values. The regulatory framework of the AI Act could serve as a catalyst for innovation, incentivizing companies to develop AI technologies that are not only advanced but also comply with high ethical standards. This could open up new markets and opportunities for AI products and services that are both safe and ethically responsible.

Conclusion on the EU AI Act

The EU’s AI Act is an ambitious legislative initiative designed to regulate the use of artificial intelligence through an approach that balances regulatory prudence with support for innovation. While challenges remain, particularly in terms of implementation and the impact on small businesses, the potential benefits in terms of protecting individual rights and promoting ethical AI are considerable.

The AI Act is an important legislative framework for keeping Europe at the forefront of technological innovation while protecting its citizens from the potential risks of AI. It is an initiative that could well shape the future of AI globally.

For businesses, policymakers, and European citizens, it is essential to stay informed about developments in the AI Act, participate in discussions, and actively prepare the necessary adaptations to maximize the benefits of AI while minimizing its risks.